MovieLabs has been busy in the last few months assessing cloud infrastructures (which in our definition includes private, hybrid and hyperscale service providers) and systems for their ability to support modern media workflows. Our review has been a broad assessment looking at the flow of video and audio assets into the cloud, between systems in clouds, and between applications required to run critical creative tasks. We’ve started from the very beginning of the content creation process – from production design through script breakdown on through every step in which assets are ingested into the cloud for processing—and then followed the primary movement of tasks, participants and assets across a cloud infrastructure, to complete a workflow.

Today, we’re publishing a list of gaps we have identified in that assessment—gaps between today’s reality and the 2030 Vision. Our intent is to create an industry dialog about how to close these gaps, collectively. MovieLabs is taking on project work in each of these areas (and we’ll share more on that later), but closing these gaps will require engagement from the whole community – creatives, tools providers, service vendors, studios and other content owners.

The MovieLabs 2030 Vision calls for software-based workflows in which files/assets are uploaded to the cloud (or clouds) and stay there. References to those assets are exchanged, and the assets are accessed by participants across many tasks. We’re not considering how a single task is carried out in the cloud – that is something which is generally possible today, and while there are benefits (such as enabling remote work in a pandemic), the migration to the cloud of a single task within a production workflow does not fully take advantage of the cloud. Instead, we’re discussing how the entirety of production workflows, every task and application, could run in the cloud with seamless interactions between them. The benefits of this are not only efficiency (less wasted time in moving and copying files), but also lower risk of errors, less task duplication, more opportunities for automation, better security, better visibility to workflow status, and more of the most precious production resources (creative time and budget) to apply to actual creative tasks that will make the content better.

So, let’s look at the current impediments we see to enabling more cloud-based workflows…

1) Much faster network connectivity is needed for ingestion of large sets of media files.

Major productions today generate millions of individual assets – from pre-greenlight through distribution. For cloud-based workflows, each asset requires “ingest” into a production store in the cloud. That includes not only camera image files and audio assets captured during active production, but all files created during production—the script, production notes, participant-to-participant communication, 3D assets, camera metadata, audio stems, proxy video files, and more.

As we look at these files, it’s clear that the smaller files are not a major concern for the industry. Many cloud-based collaboration platforms routinely upload a modest number of small files (<10MB) and do so with standard broadband connections, including cellular links. Indeed, some of this data is cloud-native (for example, chat files or metadata generated by SaaS apps) and does not need uploading at all.

However, today’s increasingly complex productions create huge volumes of data, often amounting to many terabytes at a time, which can cause substantial upload headaches. For example, a Sony camera shooting in 16-bit RAW 4K at 60fps will generate 2.12TB per hour in the form of 212,000 OCF files of approximately 10MB each. A multi-camera shoot with supporting witness cameras, uncompressed audio, proxies, high-resolution image files, and production metadata becomes a data hotspot streaming vast amounts of data into the cloud (or, more likely, multiple clouds). The volume of data will only increase as capture technology and production techniques evolve.

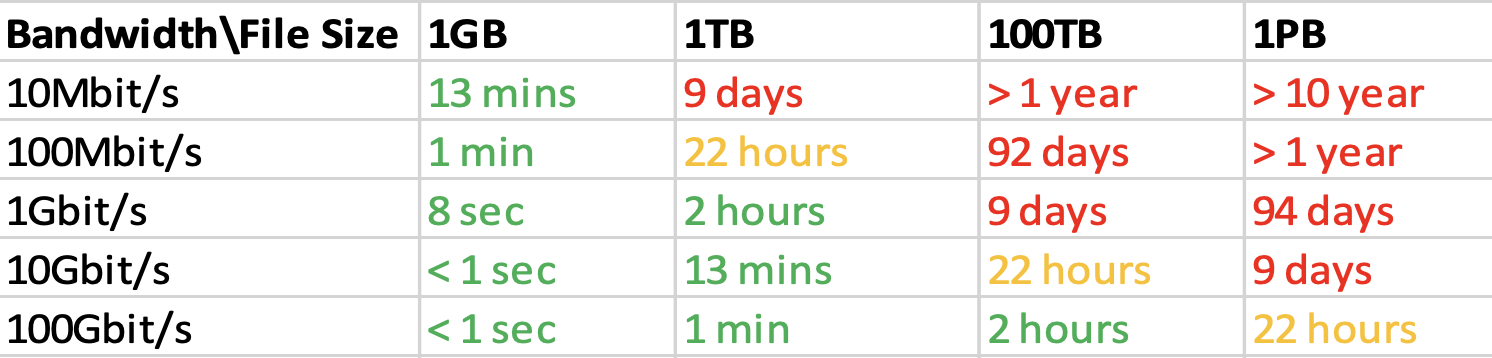

The table below illustrates the time required for file transfers using various internet connection speeds:

The table shows transfer times for various file sizes at different bandwidths and can be used to estimate the bandwidth a production needs. For example, if a production is shooting 2 hours of footage per day in ALEXA LF RAW, it will generate 4TB of data per day per camera, and anything less than a 1Gbit/s connection will be insufficient to keep up with the daily shoot schedule.

We’ve color-coded the table to indicate in green the upload times that would be generally acceptable, on par with hard-drive-based file delivery services. Yellow indicates upload times of around 24 hours, and red identifies times that are entirely impractical. While ultra-fast internet connections may not top the list of budget items for any production (especially smaller independent projects), the faster the media can be ingested to the cloud, the faster downstream processes can start, accelerating the schedule and reducing overall costs.
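The arithmetic behind a table like this is straightforward: transfer time equals the data size in bits divided by the link speed. The Python sketch below assumes decimal units (1TB = 10^12 bytes) and an idealized link running at its full rated speed; the helper names are illustrative, not part of any tool.

```python
# Idealized transfer-time estimate at full line rate. It ignores protocol overhead,
# link contention and retries, so real uploads will take longer than these figures.

TB = 10**12    # decimal terabyte, in bytes
MBPS = 10**6   # 1 Mbit/s, in bits per second
GBPS = 10**9   # 1 Gbit/s, in bits per second


def transfer_hours(size_bytes: float, link_bps: float) -> float:
    """Hours to move size_bytes over a link running at link_bps bits per second."""
    return size_bytes * 8 / link_bps / 3600


def keeps_up(daily_bytes: float, link_bps: float, window_hours: float = 24.0) -> bool:
    """True if one day's footage can be uploaded within window_hours."""
    return transfer_hours(daily_bytes, link_bps) <= window_hours


if __name__ == "__main__":
    daily = 4 * TB  # ALEXA LF RAW example from the text: about 4TB per camera per day
    for label, rate in [("100 Mbit/s", 100 * MBPS), ("1 Gbit/s", GBPS), ("10 Gbit/s", 10 * GBPS)]:
        print(f"{label:>10}: {transfer_hours(daily, rate):5.1f} h, keeps up: {keeps_up(daily, rate)}")
```

At full line rate, the 4TB-per-day example works out to roughly 89 hours at 100 Mbit/s and about 9 hours at 1 Gbit/s. Protocol overhead, contention, multiple cameras and the need for downstream work to begin promptly all lengthen real-world transfers, so practical requirements sit above these idealized figures.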

There are multiple technologies available to mitigate the upload problem, e.g., ways to accelerate transfers or compress data, transportable drives, and bringing compute to the edge. Evaluating these and other techniques is beyond this blog’s scope, but suffice it to say that most cloud-based productions would benefit from having an uncontended upload and download internet connection of greater than 1Gbps.

Bandwidth, however, is not the only constraint on ingestion to the cloud. The ingest step must also include elements required to enable a software-defined workflow (SDW) downstream. That includes assignment of rights and permissions to files, indexing and pre-processing of media, augmentation of metadata, and asset/network security. These requirements need to be well-defined upfront so that ingested files can be accessed or referenced downstream by other participants and applications. Which leads us to …

2) There is no easy way to find and retrieve assets and metadata stored across multiple clouds.

As we have explored in other blogs (such as interoperable architectures), production assets could, and likely will, be scattered across any number of private, hybrid, and hyperscale clouds. Therefore, applications need to be able to find and retrieve assets across all clouds. Breaking this down, two key steps emerge. The first is determining which assets a process needs, which is often a challenge because it can require knowing the versions and approval status of each asset. The second is determining where those assets are actually located in the clouds.

These should be considered separate processes, as not all applications need to perform both tasks. Bridging these processes in cloud-based workflows means that each asset needs to be uniquely identifiable so that applications can consistently identify an asset independent of its location and then locate and access the asset.

Architectural clarity on separating these functions is an important prerequisite to addressing this gap. It will also require the industry to develop multi-cloud mechanisms for resolving asset identifiers into asset locations and the integration of those mechanisms with workflow and storage orchestration systems, work that will likely take many years to complete.
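To illustrate why the two steps can be kept separate, here is a minimal Python sketch, assuming every asset carries a stable, location-independent identifier. Step 1 chooses which asset a task needs (here, the newest approved version); step 2 resolves that identifier to storage locations. The in-memory dictionary stands in for what would really be a distributed, multi-cloud resolution service, and all identifiers, shot names and URLs are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AssetVersion:
    asset_id: str    # stable identifier, independent of where the file is stored
    name: str        # logical name, e.g. a shot
    version: int
    approved: bool


def latest_approved(catalog: list, name: str) -> Optional[AssetVersion]:
    """Step 1 (which asset?): pick the newest approved version of a named asset."""
    candidates = [a for a in catalog if a.name == name and a.approved]
    return max(candidates, key=lambda a: a.version, default=None)


class Resolver:
    """Step 2 (where is it?): map an asset ID to every known location, across clouds."""

    def __init__(self) -> None:
        self._locations = {}   # asset ID -> list of storage locations

    def register(self, asset_id: str, location: str) -> None:
        self._locations.setdefault(asset_id, []).append(location)

    def resolve(self, asset_id: str) -> list:
        return list(self._locations.get(asset_id, []))


catalog = [
    AssetVersion("id-1", "sh010_comp", 1, True),
    AssetVersion("id-2", "sh010_comp", 2, True),
    AssetVersion("id-3", "sh010_comp", 3, False),   # newest, but not yet approved
]
resolver = Resolver()
resolver.register("id-2", "s3://studio-a/comps/sh010_v2.exr")   # hypothetical locations
resolver.register("id-2", "gs://vendor-b/cache/sh010_v2.exr")

chosen = latest_approved(catalog, "sh010_comp")
print(chosen.asset_id, resolver.resolve(chosen.asset_id))
```

An application that already knows which asset it needs can call only the resolver, while a review tool might only need step 1; neither step depends on where the bytes currently sit.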

3) We need more interoperability between commercial ecosystems.

In the early days of mobile data, what consumers could do with their data-capable cell phones was controlled by the cellular operators. Consumers were constrained by their choice of operator in the devices they could use, the services they could access, and the apps they could install. The connections between those commercial ecosystems were limited. That service model has fallen away because it constrained consumer freedom to go anywhere on the Internet, load any apps they chose, on any compatible device they chose.

We are still in the early days of cloud production and yet we’re seeing parallels with those constrained ecosystems from the early mobile internet. That means, for example, that production SaaS services today sometimes obscure where media files are held, allowing data to be accessed only through the service’s applications. As a result, cloud production systems can sometimes deliver less functionality than on-premises systems, which often include, as a basic function, the ability for any application to access and manipulate data stored anywhere on the network.

In any new and fast-changing environment, an internal ecosystem model can be a great way to launch new services fast, to deliver a great customer experience, and to innovate quickly. However, as these services mature, internal ecosystems can confront problems in scale that limit the broader adoption of new technologies and systems. For example, if file locations are not exposed to other online services, media must be moved out of one internal ecosystem and into another in order to perform work. That could mean moving from the cloud to on-prem infrastructure and then back again, or moving from one cloud infrastructure to another and then back again. Those movements are inefficient and costly, and they violate a core principle of the 2030 Vision, i.e., that media moves to the cloud and stays there, with applications coming to the media. They also create security challenges, since every movement and additional copy of media must be secured and tracked, security policies must be applied across workflows, and identities must be managed and tracked across ecosystems.

Today’s content workflows are too complex for any one service, toolset, or platform to provide all the functionality that content creators need. Therefore, we need easy and efficient ways for content creators to take advantage of multiple commercial ecosystems, with standardized interfaces and gateways between them that allow tasks and participants to extend across ecosystems and implement fully interoperable workflows.

To achieve the full benefits of the 2030 Vision, we envision a future in which commercial ecosystems include technical features such as these:

- Files and/or critical metadata are exposed and available across ecosystems so that they can be replicated or accessed by third party services (for example, by way of an API).

- Authentication and authorization can also be managed across ecosystems, for example through federated sign-on, so that a single identity can be used across services, and by enabling external systems to securely change access controls via API.

- Security auditing of actions on all platforms is open enough to allow external services with a common security architecture to track the authorized or unauthorized use of assets, applications, or workflows on the platform.
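The auditing feature assumes each platform records events in a shape that outside services can read. A minimal Python sketch, assuming a shared JSON-lines audit format; the field names, platform names and identifiers are hypothetical and do not come from any published standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    """Hypothetical platform-neutral audit record; the fields are illustrative, not a standard."""
    platform: str      # which commercial ecosystem emitted the event
    participant: str   # federated identity, the same on every platform
    action: str        # e.g. "read", "write", "delete"
    asset_id: str      # location-independent asset identifier
    allowed: bool      # whether the platform authorized the action
    timestamp: str     # UTC, ISO 8601


def emit(platform: str, participant: str, action: str, asset_id: str, allowed: bool) -> str:
    """Serialize one event as a JSON line that any external service can consume."""
    now = datetime.now(timezone.utc).isoformat()
    return json.dumps(asdict(AuditEvent(platform, participant, action, asset_id, allowed, now)))


def denied(log_lines: list) -> list:
    """An external security service can scan every platform's events for refused attempts."""
    return [event for event in map(json.loads, log_lines) if not event["allowed"]]


log = [
    emit("dailies-saas", "puid:7f3a", "read", "urn:example:asset-1234", True),
    emit("vfx-cloud", "puid:91c2", "delete", "urn:example:asset-1234", False),
]
for event in denied(log):
    print(event["platform"], event["participant"], event["action"])
```

Because every platform uses the same identity and asset identifiers, one monitor can correlate activity on a single asset across ecosystems without platform-specific parsers.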

The 2030 Vision will require dynamic security policies that extend, and enable participant authentication, across multiple internal ecosystems, including granular control of authorization (e.g., access controls) to the level of individual participants, individual tasks, and individual data and metadata assets. That will require commercial ecosystems to incorporate a high level of interoperability and communication across ecosystems in order to deliver dynamic policies that change frequently to enable real-time security management for end-to-end production workflows.
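To make granular, frequently changing authorization concrete, here is a minimal Python sketch, assuming a single policy store that every ecosystem consults. Each grant is scoped to one participant, one task, one asset and one action, and anything not explicitly granted is denied. The class, method and identifier names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One permission scoped to a participant, a task, an asset and an action."""
    participant: str
    task: str
    asset_id: str
    action: str


class PolicyStore:
    """Hypothetical policy store shared across ecosystems; anything not granted is denied."""

    def __init__(self) -> None:
        self._grants = set()

    def grant(self, participant: str, task: str, asset_id: str, action: str) -> None:
        self._grants.add(Grant(participant, task, asset_id, action))

    def close_task(self, task: str) -> None:
        """Policies change often: closing a task revokes every grant tied to it at once."""
        self._grants = {g for g in self._grants if g.task != task}

    def is_allowed(self, participant: str, task: str, asset_id: str, action: str) -> bool:
        return Grant(participant, task, asset_id, action) in self._grants


policy = PolicyStore()
policy.grant("puid:7f3a", "task:roto-sh010", "asset:sh010_plate", "read")
print(policy.is_allowed("puid:7f3a", "task:roto-sh010", "asset:sh010_plate", "read"))   # True
print(policy.is_allowed("puid:7f3a", "task:roto-sh010", "asset:sh020_plate", "read"))   # False
policy.close_task("task:roto-sh010")
print(policy.is_allowed("puid:7f3a", "task:roto-sh010", "asset:sh010_plate", "read"))   # False
```

Scoping grants to tasks means access can follow the workflow: when a task closes, the related permissions disappear everywhere the store is consulted.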

4) We still must resolve issues with remote desktops for creative tasks.

Until all creative tools are cloud-native SaaS products, cloud media files will most often be manipulated using existing applications running on cloud-based virtual machines and accessed through remote desktops. In a prior blog on remote desktops, we assessed several technical shortcomings in those technologies that prevent media ingested to the cloud from being manipulated in the same way as on local machines, including a lack of support for HDR, high-bit-depth video, and surround sound. Until we close those gaps, the ability to manipulate media files and collaborate in the cloud will be stunted.

5) People, software and systems cannot easily and reliably communicate concepts with each other.

The next key group of issues to resolve relates to communicating workflows and concepts. That communication could be human-to-human, but also human-to-machine and ultimately machine-to-machine, which will enable automation of many repetitive or mundane production tasks.

Effective software-defined workflows need standardized mechanisms to describe assets, participants, security, permissions, communication protocols, etc. Those mechanisms are required to allow any cloud service or software application to participate in a workflow and understand the dialog that is occurring. For example, a number of common words and terms of art are understood by context – slate, shot, and take, for instance. All have different meanings depending on their exact context, and it’s hard for machines to understand that nuance.

In addition to describing the individual elements of a production, we need to describe how elements relate to one another. These relationships, for example, allow a proxy to be uploaded in real time and to stay connected to the RAW file original – which could arrive in the cloud hours or days later. Such a system needs to allow two assets stored on different clouds to be moved, revised, processed, deep archived and re-hydrated, all without losing connections to each other. The same is true of other less tangible elements such as production notes made on a particular shot – which must be related to the files captured on that shot and other information that could be useful later such as the camera and lens configurations, wardrobe decisions and even time of day and positions of the lighting. These elements and their relationships need to be defined in a common way so all connected systems can create and manage the connections between elements.
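One way to keep these connections intact is to store relationships between identifiers rather than between file paths. The Python sketch below assumes that approach; the class, the relation names (`proxyOf`, `describes`) and the storage URLs, including the `archive://` scheme, are hypothetical.

```python
from collections import defaultdict
from typing import Optional


class AssetGraph:
    """Hypothetical relationship store keyed by asset ID rather than by storage location."""

    def __init__(self) -> None:
        self.location = {}              # asset ID -> current location (None = not yet in the cloud)
        self.links = defaultdict(set)   # asset ID -> {(relation, other asset ID)}

    def add(self, asset_id: str, location: Optional[str] = None) -> None:
        self.location[asset_id] = location

    def relate(self, source: str, relation: str, target: str) -> None:
        self.links[source].add((relation, target))

    def move(self, asset_id: str, new_location: str) -> None:
        # Moving, archiving or rehydrating changes only the location; relationships are untouched.
        self.location[asset_id] = new_location

    def related(self, asset_id: str, relation: str) -> list:
        return sorted(target for rel, target in self.links[asset_id] if rel == relation)


graph = AssetGraph()
graph.add("proxy-0001", "s3://cloud-a/proxies/0001.mov")   # proxy uploaded in real time
graph.add("raw-0001")                                       # RAW original has not arrived yet
graph.relate("proxy-0001", "proxyOf", "raw-0001")
graph.add("note-0042", "s3://cloud-a/notes/0042.json")
graph.relate("note-0042", "describes", "raw-0001")

graph.move("raw-0001", "gs://cloud-b/ocf/0001.raw")        # RAW lands on another cloud later
graph.move("raw-0001", "archive://deep/0001.raw")          # and is later deep archived
print(graph.related("proxy-0001", "proxyOf"), graph.location["raw-0001"])
```

Because links point at identifiers, the proxy and the production note stay attached to the RAW original through every move; only the location entry changes.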

6) It is difficult to communicate messages to systems and other workflow participants, especially across clouds and organizations.

Software-Defined Workflows require a large amount of coordinated communication between people and systems. Orchestration systems control the many parts of an SDW by allocating tasks and assets to participants on certain infrastructures. For those systems to work, we need agreed methods for the component systems to coordinate with each other—to communicate, for example, that an ingest has started, been completed or somehow failed. By standardizing aspects of this collaboration system, developers can write applications that create tasks with assets, create sub-tasks from tasks, create relations between assets and metadata, and pass messages or alerts down a workflow that appear as notifications for subsequent users or applications. These actions require an understanding of preceding actions, plus open standards for describing and communicating those actions in order to deploy at scale and allow messages to ripple out throughout a workflow. As an example, if an EDL is changed that impacts a VFX provider, the VFX provider should be notified automatically when the relevant change has occurred.

Our objective here is to standardize the mundane integrations that do not differentiate a software product or service in order to enable interoperability, which then frees up developer resources to focus on the innovative components and features that truly do differentiate products.
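The notification pattern described in this section resembles publish/subscribe messaging. A minimal Python sketch, assuming an in-process bus as a stand-in for a cross-cloud messaging service; the event type strings, the EDL and shot identifiers, and the VFX vendor's filtering rule are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    """Hypothetical workflow event; the type strings are illustrative, not a published spec."""
    type: str                    # e.g. "ingest.started", "ingest.completed", "ingest.failed", "edl.changed"
    subject: str                 # identifier of the asset the event is about
    details: dict = field(default_factory=dict)


class EventBus:
    """In-process publish/subscribe bus standing in for a cross-cloud messaging service."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)   # event type -> list of callables

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event to every subscriber of its type; return how many were called."""
        handlers = self._handlers.get(event.type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
vfx_inbox = []
vfx_shots = {"sh010", "sh020"}   # shots this hypothetical VFX vendor is working on


def notify_vfx(event: Event) -> None:
    # Only EDL changes touching the vendor's shots become notifications.
    affected = vfx_shots & set(event.details.get("shots", []))
    if affected:
        vfx_inbox.append(f"{event.subject} changed shots {sorted(affected)}")


bus.subscribe("edl.changed", notify_vfx)
bus.publish(Event("edl.changed", "edl-reel2-v7", {"shots": ["sh020", "sh045"]}))
bus.publish(Event("edl.changed", "edl-reel3-v2", {"shots": ["sh300"]}))
print(vfx_inbox)
```

Standardizing only the event envelope (type, subject, details) lets each vendor decide which changes matter to it, which is the kind of non-differentiating integration the text proposes to share.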

7) There is no easy way to manage security in a workflow spanning multiple applications and infrastructure.

Our cloud-based approach (as explained in the MovieLabs Common Security Architecture for Production, or CSAP) is a zero-trust architecture. This approach requires every participant (whether a user, automated service, application or device) to be authenticated before joining any workflow and then authorized to access or modify any particular asset. This allows secure ingest and processing of assets in the cloud. Realizing the benefits of this aspect of the 2030 Vision, however, also requires closing some key gaps.

When content owners allocate work to be done (either to vendors or within their own organization’s security systems), they select rights and privileges which typically are constrained to the cloud service or systems on which the work is occurring. In the case of service providers, the contract stipulates certain security protections and usually requires external audits to validate that the protections are understood and implemented correctly. In addition, each of the major hyperscale cloud service providers also provides identity, authorization and security services for storage and services running on its cloud. Some of these cloud tools, but not all, extend across to other cloud service providers. The result is a potential hodgepodge of security tools, systems and processes that do not interoperate. Since complexity is the enemy of good security, security models and frameworks should identify and standardize commonalities now, before the security implementations get too complex.

Today the industry is in a quandary as to which security and identity services to use for authorizing and authenticating users to support workflows with assets, tools and participants scattered across multiple infrastructures. The MovieLabs CSAP was designed to provide a common architecture to deal with these issues in an interoperable manner and we’re working now with the industry to enable its implementation across clouds and application ecosystems.
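As a rough illustration of the zero-trust pattern, the Python sketch below authenticates every request before checking authorization for the specific asset and action. It is not CSAP's actual mechanism: the HMAC shared-secret token is a stand-in for a real identity provider, and the participant and asset identifiers are hypothetical.

```python
import hashlib
import hmac

# Demo-only shared secret. A real deployment would rely on an identity provider
# and asymmetric keys; this keeps the sketch self-contained.
SECRET = b"demo-only-secret"


def issue_token(participant_id: str) -> str:
    """Stand-in for an identity provider issuing a credential to a participant."""
    return hmac.new(SECRET, participant_id.encode(), hashlib.sha256).hexdigest()


def authenticate(participant_id: str, token: str) -> bool:
    """Verify the credential; compare_digest avoids leaking information through timing."""
    return hmac.compare_digest(issue_token(participant_id), token)


def handle(participant_id: str, token: str, asset_id: str, action: str, acl: dict) -> str:
    # Zero trust: being inside a network or cloud grants nothing. Every request,
    # from a user, service, application or device, is authenticated first...
    if not authenticate(participant_id, token):
        return "deny: unauthenticated"
    # ...and then authorized for this specific asset and action.
    if action not in acl.get((participant_id, asset_id), set()):
        return "deny: unauthorized"
    return "allow"


acl = {("svc:transcoder", "asset:raw-0001"): {"read"}}
good = issue_token("svc:transcoder")
print(handle("svc:transcoder", good, "asset:raw-0001", "read", acl))      # allow
print(handle("svc:transcoder", good, "asset:raw-0001", "write", acl))     # deny: unauthorized
print(handle("svc:transcoder", "forged", "asset:raw-0001", "read", acl))  # deny: unauthenticated
```

The interoperability gap appears when the token issuer and the access-control list live in different clouds: both checks only work across infrastructures if the systems share identity and policy formats.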

8) There is no easy way to manage authentication of workflow participants from multiple facilities and organizations.

In today’s workflow, a post-production vendor may require a creative user to log in to a local workstation to work, with another login required to access the SaaS dailies platform to review the director’s notes, and a third login (with separate credentials) needed to run file transfers for the assets to be worked on. In an ideal world, one login would be usable across all platforms, with policies from the production determining permissions. Those policies, along with work assignments and roles, would seamlessly manage the user’s access to assets, tools and applications without requiring the creation and maintenance of separate credentials for every system.

Our industry is unique in the number of independent contractors and small companies that are of critical significance to productions. A single Production User ID (PUID) system would make many lives easier, as well as allow software tools to identify participants in a consistent way. A PUID system would make it much easier to onboard creatives to productions and remove them afterwards, and it would greatly reduce the chance of users forgetting, or writing down on Post-it notes, the dozens of username and password combinations they need for each system.
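A minimal Python sketch of the single-identity idea, assuming one PUID per person and a production-level policy that maps roles to actions on each connected system (for example the workstation, dailies and transfer logins from the scenario above). The class, the role and system names, and the PUID format are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Production:
    """Hypothetical production-wide policy: one PUID per person, roles grant access on every system."""
    role_access: dict                              # role -> {system -> set of allowed actions}
    members: dict = field(default_factory=dict)    # PUID -> role on this production

    def onboard(self, puid: str, role: str) -> None:
        self.members[puid] = role

    def offboard(self, puid: str) -> None:
        # A single removal revokes access everywhere; no per-system accounts to clean up.
        self.members.pop(puid, None)

    def can(self, puid: str, system: str, action: str) -> bool:
        role = self.members.get(puid)
        if role is None:
            return False
        return action in self.role_access.get(role, {}).get(system, set())


show = Production(role_access={
    "compositor": {
        "workstation": {"login"},
        "dailies": {"view", "comment"},
        "transfer": {"download"},
    },
})
show.onboard("puid:7f3a", "compositor")
print(show.can("puid:7f3a", "dailies", "view"))       # True
show.offboard("puid:7f3a")
print(show.can("puid:7f3a", "workstation", "login"))  # False
```

The user keeps one identity across productions, while each production's policy decides what that identity can do and for how long.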

9) We will need a comprehensive change management plan to train and onboard creative teams to these new, more efficient ways of working.

Many of these cloud-based workflow changes will require new or adapted tools and processes. Much of the complexity can be obscured from individual users, but there are always usability lessons, training, and change management issues to consider when implementing a new way of working. Productions are high-risk, high-stress endeavors, so we need to implement these systems and onboard teams without upsetting workflows. Developing trust amongst creative teams takes many years and experience in actual productions. The changes proposed here likewise will need considerable time to establish trust and convince creatives that they can securely power productions with better efficiency and improved collaboration. Fortunately, the software-defined workflows described here use the same mechanisms available in other collaboration platforms already widely used today – Slack for real-time collaboration, Google Docs for multi-person editing, Microsoft Teams for integrated media and calling. Those tools provide the model for real-time and rapid decision-making that we want to bring to media creation.

As the industry looks to ramp back up after the COVID shutdowns, it’s worth noting that the true potential of the cloud for production workflows was not exploited during temporary work-from-home tasks. If we can execute on a more collaborative view of entire production systems operating across cloud infrastructures, we believe we can “build back better” and enable far more efficiency in our new workflows.

If the industry can close these nine gaps, we will be closer to realizing a true multi-cloud-based workflow from end to end. Some of these challenges are beyond what any one company can solve (e.g., the availability of low-cost, massively high-bandwidth internet connections). Still, there are areas where we can work together to close the gaps. To that end, MovieLabs has been working to define some of the required specifications, architectures, and best practices, and in subsequent posts we will elaborate on some of these solutions in more detail.